How NoSQL will meet RDBMS in the future

The NoSQL versus RDBMS war started a few years ago, and as the new technologies mature it seems that the two camps are moving towards each other. The latest example can be found at http://blog.tapoueh.org/blog.dim.html#%20Synchronous%20Replication where the author describes an upcoming PostgreSQL feature that lets the application developer choose the service level and consistency of each call, hinting to the database cluster what it should do if a database node fails.

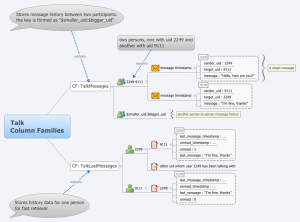
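
To make the per-call choice concrete, here is a minimal sketch that only builds the SQL for one transaction. It assumes the feature is exposed through PostgreSQL's `synchronous_commit` setting; the helper name `transaction_with_durability` and the `page_views` table are made up for illustration, and nothing here talks to a real server.

```python
# Values accepted by PostgreSQL's synchronous_commit setting
# (newer releases accept more of them than older ones).
VALID_LEVELS = {"on", "off", "local", "remote_write", "remote_apply"}


def transaction_with_durability(statements, level):
    """Wrap statements in a transaction that carries its own commit level.

    Hypothetical helper: it returns the SQL as a list of strings so the
    idea can be shown without a database connection.
    """
    if level not in VALID_LEVELS:
        raise ValueError(f"unknown synchronous_commit level: {level}")
    # SET LOCAL only lasts until the end of the current transaction,
    # so other transactions keep the server default.
    return [
        "BEGIN",
        f"SET LOCAL synchronous_commit TO {level}",
        *statements,
        "COMMIT",
    ]


# A low-value write that may be lost on failure in exchange for speed.
fast = transaction_with_durability(
    ["INSERT INTO page_views (url) VALUES ('/home')"], "off"
)
print(";\n".join(fast) + ";")
```

An important write would use the same helper with `"on"`, so the commit waits for the stricter guarantee while the page-view insert does not.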

The same technique is widely used in Cassandra, where each operation carries a consistency level attribute. The programmer decides whether the operation needs full consistency across the entire cluster, or whether it is acceptable for the result to miss the most up-to-date data if a node fails (gaining extra speed for read operations in exchange). The relaxed option is also called eventual consistency.
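
The effect of a per-operation consistency level can be seen in a toy model. This is not the Cassandra driver API: `ToyCluster`, `Replica` and the simplified `ONE`/`QUORUM`/`ALL` rules are assumptions made for illustration, and real repair mechanisms are deliberately left out so that the stale read stays visible.

```python
from dataclasses import dataclass
from typing import Optional

# How many replicas must take part for each (simplified) consistency level.
REQUIRED = {
    "ONE": lambda n: 1,
    "QUORUM": lambda n: n // 2 + 1,
    "ALL": lambda n: n,
}


@dataclass
class Replica:
    up: bool = True
    value: Optional[str] = None
    timestamp: int = 0


class ToyCluster:
    """Hypothetical in-memory cluster; every replica holds the same key."""

    def __init__(self, size=3):
        self.replicas = [Replica() for _ in range(size)]
        self.clock = 0

    def _contactable(self, level):
        need = REQUIRED[level](len(self.replicas))
        live = [r for r in self.replicas if r.up]
        if len(live) < need:
            raise RuntimeError(f"{level} needs {need} replicas, {len(live)} are up")
        return live, need

    def write(self, value, level):
        live, _ = self._contactable(level)
        self.clock += 1
        for replica in live:  # replicas that are down miss the write
            replica.value, replica.timestamp = value, self.clock

    def read(self, level):
        live, need = self._contactable(level)
        # Ask only as many replicas as the level demands, newest answer wins.
        return max(live[:need], key=lambda r: r.timestamp).value


cluster = ToyCluster(3)
cluster.write("v1", "ALL")
cluster.replicas[0].up = False   # one node fails
cluster.write("v2", "QUORUM")    # still succeeds on the other two
cluster.replicas[0].up = True    # node returns with old data

print(cluster.read("ONE"))       # fast, but answers from the stale replica
print(cluster.read("QUORUM"))    # slower, sees the latest write
```

The read at `ONE` returns the old value because it only asks the replica that missed the second write, while the read at `QUORUM` has to include at least one replica that received it.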

The CAP theorem says that a distributed application can only have two out of three properties: Consistency, Availability and Partition Tolerance (hence the acronym CAP). To give an example: if you choose Consistency and Availability, your application cannot handle the loss of a node from your cluster. If you choose Availability and Partition Tolerance, your application might not get the most up-to-date data if some of your nodes are down. The third option is to choose Consistency and Partition Tolerance, but then your entire cluster will be down if you lose just one node.
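
The trade-off can be put into numbers with the usual quorum rule of thumb, which is an addition here rather than something taken from the theorem itself: with `n` replicas, a write acknowledged by `w` of them and a read that asks `r` of them are guaranteed to overlap when `r + w > n`. The function name and parameters below are illustrative assumptions.

```python
def quorum_tradeoff(n, w, r):
    """Return (reads_see_latest_write, node_failures_tolerated).

    n: number of replicas, w: replicas that must acknowledge a write,
    r: replicas that must answer a read.
    """
    if not (1 <= w <= n and 1 <= r <= n):
        raise ValueError("w and r must be between 1 and n")
    overlap = r + w > n        # every read set shares a node with every write set
    tolerated = n - max(w, r)  # nodes that can fail while both operations still work
    return overlap, tolerated


for w, r in [(1, 1), (2, 2), (3, 1)]:
    print(f"n=3 w={w} r={r} ->", quorum_tradeoff(3, w, r))
# n=3 w=1 r=1 -> (False, 2)
# n=3 w=2 r=2 -> (True, 1)
# n=3 w=3 r=1 -> (True, 0)
```

The last row is the situation described above where losing a single node stops the cluster from accepting writes, and the first row is the one where a read may miss the latest data.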

Traditional relational databases are designed around the ACID principle, which loosely maps to Consistency and Partition Tolerance in the CAP theorem. This makes it hard to scale an ACID database across multiple hosts, because ACID needs Consistency. Cassandra, on the other hand, can move around the CAP theorem just fine, because it lets the programmer choose between Availability + Partition Tolerance and Consistency + Availability.

Conversely, as NoSQL technology matures it will start to pick up features from traditional relational databases. Things like sequences, secondary indexes, views and triggers can already be found in some NoSQL products, and many more are on roadmaps. There’s also the ever-growing need to mine the data store to extract business data out of it. Such features can be seen in Cassandra's Hadoop integration and in MongoDB, which has an internal map-reduce implementation.
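
For readers who have not met map-reduce, the idea behind that kind of data mining fits in a few lines. This is a plain in-memory sketch, not the Hadoop or MongoDB API; the `orders` records and the function names are invented for the example.

```python
from collections import defaultdict


def map_reduce(records, mapper, reducer):
    """Run mapper over every record, group emitted values by key, then reduce."""
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    return {key: reducer(key, values) for key, values in groups.items()}


# Hypothetical documents as they might sit in a schemaless store.
orders = [
    {"customer": "alice", "total": 30},
    {"customer": "bob", "total": 12},
    {"customer": "alice", "total": 8},
]


def emit_total(order):
    # Map step: one (key, value) pair per order.
    yield order["customer"], order["total"]


def sum_totals(customer, totals):
    # Reduce step: collapse all values for one key into a single number.
    return sum(totals)


revenue = map_reduce(orders, emit_total, sum_totals)
print(revenue)  # {'alice': 38, 'bob': 12}
```

In a real cluster the map step runs on the nodes that hold the data and only the grouped values travel over the network, which is what makes the approach attractive for large data sets.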

Definition of NoSQL: Scavenging the wreckage of alien civilizations, misunderstanding it, and trying to build new technologies on it.

As long as NoSQL is used wisely it will grow and mature, but choosing it over an RDBMS without good reason is a very easy way to shoot yourself in the foot. After all, it’s much easier to get a single powerful machine, such as an EC2 x-large instance, run PostgreSQL on it, and maybe add a few asynchronous replicas to boost read queries. It will work just fine as long as the master node can keep up, and it’ll be easier to program.