Running Kubernetes at home for fun and profit.

Running a Kubernetes cluster at home might sound insane at first. However, if you already run some software on a few Raspberry Pis and you are familiar with Docker, you can get clear benefits from moving everything onto Kubernetes.

Kubernetes gives you a clear and uniform interface for running your workloads. You can easily see what you are running, so you no longer forget that you had a few scripts running somewhere. You can automate your backups, put strong authentication in front of the services you run, and install new software onto your cluster easily using Helm.

This article doesn’t explain what Kubernetes is or how to deploy it, as there are already many tutorials and guides on the Internet. Instead, it shows what my setup looks like and gives you ideas about what you can do. I’m assuming that you already know Kubernetes on some level.

The building blocks: hardware.

I’m running Kubernetes on two machines: one is a small form factor Intel-based PC with four cores, 16 GiB of RAM and an SSD, and the other is an HPE MicroServer with three spinning disks.

The small PC runs the Kubernetes control plane by itself. I installed a standard Ubuntu 18.04 LTS. I let the installer partition my disk using LVM, gave ~20 GiB to the root filesystem and left the remaining ~200 GiB unallocated (I’ll come back to this later). After the installation I used kubeadm to install a single-node control plane.

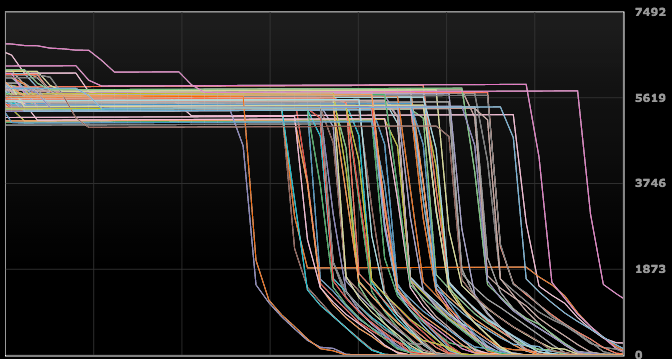

The MicroServer is a continuation of my previous story and its main job is to run ZFS. It has three spinning disks in a triple-mirrored configuration, and its main purpose is to consolidate and archive all the data for easy backups. When this project started I used kubeadm to install a worker node on this server and join it to the cluster.

The building blocks: storage.

Kubernetes makes it easy to run ephemeral workloads, and implementing a good persistent storage solution does not have to be hard either. I ended up with the following setup:

- Let the PC’s SSD also work as general-purpose storage.

- Allocate the remaining LVM space to a logical volume (LV) and create a ZFS pool on it.

- Create a ZFS filesystem on this zpool (I call mine data/kubernetes-nfs).

- Run the standard Linux kernel NFS server (configured via ZFS) to export this storage to the pods.

- Use nfs-client-provisioner to create a StorageClass which automatically provisions a PersistentVolume on this NFS storage for each new PersistentVolumeClaim.

- Use sanoid to take hourly snapshots of this storage and stream the snapshots to the MicroServer as backups.
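As a rough sketch, the StorageClass from the list above could look something like this. The class name nfs-ssd is the one used in the rest of this article; the provisioner string is only an assumption and has to match whatever name the nfs-client-provisioner deployment was configured with.

```yaml
# Sketch of a StorageClass backed by nfs-client-provisioner.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-ssd
# Assumption: must equal the provisioner name set in the
# nfs-client-provisioner Deployment (its PROVISIONER_NAME setting).
provisioner: cluster.local/nfs-client-provisioner
parameters:
  # "false" removes the data directory when the claim is deleted;
  # "true" keeps it on the NFS share as an archive instead.
  archiveOnDelete: "false"
```

If you install the provisioner with its Helm chart, the chart can create this object for you, so treat the manifest mainly as an illustration of what ends up in the cluster.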

This setup gives me fast enough storage for my requirements, is simple to maintain and is foolproof, as the stored data is easily accessible just by navigating to the correct directory on the host, and I can easily back up all of it using ZFS. Also, if I need a large amount of slower storage, I can NFS-mount storage from the MicroServer itself.

When I install new software onto my cluster I just tell it to use the “nfs-ssd” StorageClass and everything else happens automatically. This is especially useful with Helm, as pretty much all Helm charts support setting a storage class.
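For software that is not installed from a Helm chart, requesting storage is a matter of creating a claim that names the StorageClass. A minimal sketch, where the claim name, namespace and size are made up for illustration:

```yaml
# Sketch of a claim that the provisioner would satisfy automatically.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myapp-data        # hypothetical name
  namespace: myapp        # hypothetical namespace
spec:
  storageClassName: nfs-ssd
  accessModes:
    - ReadWriteMany       # NFS lets several pods mount the same volume
  resources:
    requests:
      storage: 5Gi        # hypothetical size
```

A pod then refers to the claim by name in its `volumes` section, and the matching directory shows up under the NFS export on the host.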

Another option would be to run Ceph, but I didn’t have multiple suitable servers to run it on and it is more complex than this setup. kubernetes-zfs-provisioner also looked interesting, but it didn’t feel mature enough at the time of writing. Or, if you know you will have just one server, you can simply use hostPath volumes to mount directories from your host into the pods.
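For the single-server case, a hostPath volume might be declared roughly like this. The pod name, image and host directory are placeholders, not part of my setup:

```yaml
# Sketch of a pod that mounts a directory straight from the node.
apiVersion: v1
kind: Pod
metadata:
  name: myapp                     # hypothetical name
spec:
  containers:
    - name: myapp
      image: nginx:stable         # placeholder image
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data
      hostPath:
        path: /srv/myapp          # hypothetical directory on the host
        type: DirectoryOrCreate   # create the directory if it is missing
```

Keep in mind that the data lives only on that one node, so the pod has to be scheduled there every time.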

The building blocks: networking.

I opted to run Calico as it fits my purpose pretty well. I happen to have a Ubiquiti EdgeMax at home, so I ended up setting Calico to BGP-peer with the EdgeMax to announce the pod IP address space. There are other options such as flannel. Kubernetes doesn’t really care what kind of network setup you have, so feel free to explore and pick what suits you best.
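On the Calico side, the peering can be expressed as a BGPPeer resource applied with calicoctl. The sketch below uses placeholder values: the peer address and AS number are assumptions and must match what is configured on the router.

```yaml
# Sketch of a global BGP peer: every Calico node peers with the router.
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: edgemax
spec:
  peerIP: 192.168.1.1   # hypothetical LAN address of the router
  asNumber: 64512       # hypothetical private AS number set on the router
```

The router needs a matching BGP neighbor entry for each node before it accepts the announced pod routes.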

The building blocks: Single Sign On.

A very nice feature of Kubernetes is the Ingress object. I personally like the ingress-nginx controller, which is really easy to set up and also supports authentication. I paired it with oauth2_proxy so that I can use Google accounts (or any other OAuth2 provider) to implement Single Sign On for any of my applications.

You will also want SSL/TLS encryption, and Let’s Encrypt is a free way to get it. The cert-manager tool handles certificate issuance with the Let’s Encrypt servers. A highly recommended approach is to own a domain and create a wildcard DNS record *.mydomain.com pointing to your cluster’s public IP. In my case my EdgeMax has one public IP from my Internet provider and it forwards TCP connections on ports 80 and 443 to two NodePorts which my ingress-nginx Service object exposes.
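The NodePort side of that forwarding could be sketched like this. The namespace, selector label and port numbers are assumptions; the selector has to match the labels on your ingress-nginx controller pods, and the node ports must fall inside the cluster’s NodePort range (30000-32767 by default).

```yaml
# Sketch of a NodePort Service in front of the ingress-nginx controller.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
spec:
  type: NodePort
  selector:
    app.kubernetes.io/name: ingress-nginx   # assumption: controller pod label
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 30080    # router forwards public :80 here
    - name: https
      port: 443
      targetPort: 443
      nodePort: 30443    # router forwards public :443 here
```

With these example numbers the router would forward public port 80 to port 30080 and public port 443 to port 30443 on a cluster node.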

I feel that this is the best feature I have gotten so far from running Kubernetes. I can just deploy a new web application, give it a new Ingress object and the application is automatically available from anywhere in the world, but protected with strong Google authentication!

The oauth2_proxy itself is deployed with this manifest:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: sso-admins
      namespace: sso
    data:
      emails.txt: |-
        my.email@gmail.com
        another.email@gmail.com
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        k8s-app: oauth2-proxy-admins
      name: oauth2-proxy-admins
      namespace: sso
    spec:
      replicas: 1
      selector:
        matchLabels:
          k8s-app: oauth2-proxy-admins
      template:
        metadata:
          labels:
            k8s-app: oauth2-proxy-admins
        spec:
          containers:
          - args:
            - --provider=google
            - --authenticated-emails-file=/data/emails.txt
            - --upstream=file:///dev/null
            - --http-address=0.0.0.0:4180
            - --whitelist-domain=.mydomain.com
            - --cookie-domain=.mydomain.com
            - --set-xauthrequest
            env:
            - name: OAUTH2_PROXY_CLIENT_ID
              value: "something.apps.googleusercontent.com"
            - name: OAUTH2_PROXY_CLIENT_SECRET
              value: "something"
            # docker run -ti --rm python:3-alpine python -c 'import secrets,base64; print(base64.b64encode(base64.b64encode(secrets.token_bytes(16))));'
            - name: OAUTH2_PROXY_COOKIE_SECRET
              value: somethingelse
            image: quay.io/pusher/oauth2_proxy
            imagePullPolicy: IfNotPresent
            name: oauth2-proxy
            ports:
            - containerPort: 4180
              protocol: TCP
            volumeMounts:
            - name: authenticated-emails
              mountPath: /data
          volumes:
          - name: authenticated-emails
            configMap:
              name: sso-admins
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: oauth2-proxy-admins
      name: oauth2-proxy-admins
      namespace: sso
    spec:
      ports:
      - name: http
        port: 4180
        protocol: TCP
        targetPort: 4180
      selector:
        k8s-app: oauth2-proxy-admins
    ---
    # The extensions/v1beta1 Ingress API is not served by Kubernetes 1.22 and later;
    # those clusters need networking.k8s.io/v1, which uses a different backend syntax.
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: oauth2-proxy-admins
      namespace: sso
    spec:
      rules:
      - host: sso.mydomain.com
        http:
          paths:
          - backend:
              serviceName: oauth2-proxy-admins
              servicePort: 4180
            path: /oauth2
      tls:
      - hosts:
        - sso.mydomain.com
        secretName: sso-mydomain-com-tls

If you have different groups of users in your cluster and want to restrict different applications to different users, you can just duplicate this manifest and use a different user list in the ConfigMap object.

Now when you want to run a new application you expose it with this kind of Ingress:

    # The extensions/v1beta1 Ingress API is not served by Kubernetes 1.22 and later;
    # those clusters need networking.k8s.io/v1, which uses a different backend syntax.
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
        kubernetes.io/tls-acme: "true"
        # Unauthenticated users are redirected here to log in.
        nginx.ingress.kubernetes.io/auth-signin: https://sso.mydomain.com/oauth2/start?rd=https://$host$request_uri
        # Every request is first checked against this endpoint.
        nginx.ingress.kubernetes.io/auth-url: https://sso.mydomain.com/oauth2/auth
      labels:
        app: myapp
      name: myapp
      namespace: myapp
    spec:
      rules:
      - host: myapp.mydomain.com
        http:
          paths:
          - backend:
              serviceName: myapp
              servicePort: http
            path: /
      tls:
      - hosts:
        - myapp.mydomain.com
        secretName: myapp-mydomain-com-tls

And behold! When you navigate to myapp.mydomain.com you are asked to log in with your Google account.

The building blocks: basic software.

There is some highly recommended software you should be running in your cluster:

- Kubernetes Dashboard, a web based UI for your cluster.

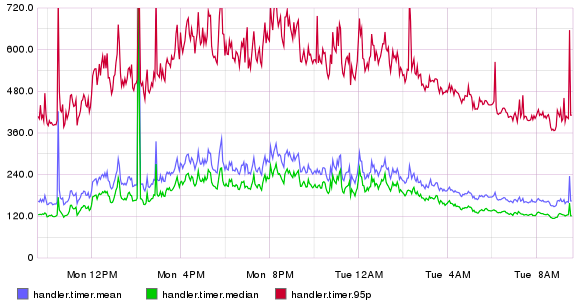

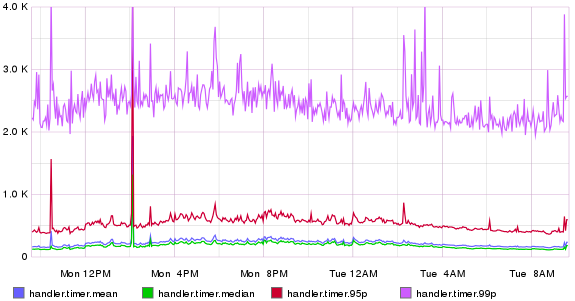

- Prometheus for collecting metrics from your cluster. I recommend using the prometheus-operator to install it. It will also install Grafana so that you can easily build dashboards for the data.

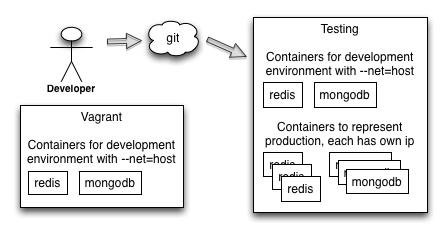

The building blocks: Continuous deployment with GitLab.

If you ever develop software of your own, I can highly recommend GitLab.com, as it gives you free private Git repositories, a built-in deployment pipeline and a private Docker registry for your own applications.

If you expose your Kubernetes API server to the public internet (I use NAT to map port 6445 on my public IP to the Kubernetes apiserver) you can let GitLab run your builds in your own cluster, making your builds much faster.

I created a new GitLab group where I configured my cluster so that all projects within that group can easily access the cluster. I then use the .gitlab-ci.yml to both build a new Docker image from my project and deploy it into my cluster. Here’s an example project showing all that. With this setup I can write a new project from a simple template and have it running in my cluster in less than 15 minutes.
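To give an idea of the shape of such a pipeline, here is a minimal sketch of a .gitlab-ci.yml. It is not my actual file: the kubectl image, the Deployment and container name myapp and the environment name are assumptions, and depending on how your runner is set up the docker:dind service may need privileged mode and extra TLS-related variables.

```yaml
# Hypothetical .gitlab-ci.yml: build an image, then roll it out.
stages:
  - build
  - deploy

build:
  stage: build
  image: docker:latest
  services:
    - docker:dind
  script:
    # CI_REGISTRY* and CI_COMMIT_SHA are variables predefined by GitLab CI.
    - docker login -u "$CI_REGISTRY_USER" -p "$CI_REGISTRY_PASSWORD" "$CI_REGISTRY"
    - docker build -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA" .
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"

deploy:
  stage: deploy
  image:
    name: bitnami/kubectl:latest   # assumption: any image that ships kubectl works
    entrypoint: [""]               # this image's default entrypoint is kubectl itself
  environment:
    name: production               # cluster credentials are passed to jobs that have an environment
  script:
    # Assumes a Deployment and a container both named "myapp" already exist.
    - kubectl set image deployment/myapp "myapp=$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"
  only:
    - master
```

Tagging the image with the commit SHA means every pipeline run changes the Deployment’s image reference, which is what triggers the rolling update.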

You can also run Visual Studio Code (VS Code) in your cluster so that you have a full-featured programming editor in your browser. See https://github.com/cdr/code-server for more information. When you combine this with the SSO you can be fully productive on any computer, assuming it has a web browser.

What next?

If you reached this far you should be pretty comfortable with using Kubernetes. Installing new software is easy, as you will often find that there’s an existing Helm chart available. I personally also run this software in my cluster:

- ZoneMinder (IP security camera software).

- A TFTP server to back up my switch and EdgeMax configurations.

- Ubiquiti Unifi controller to manage my wifi.

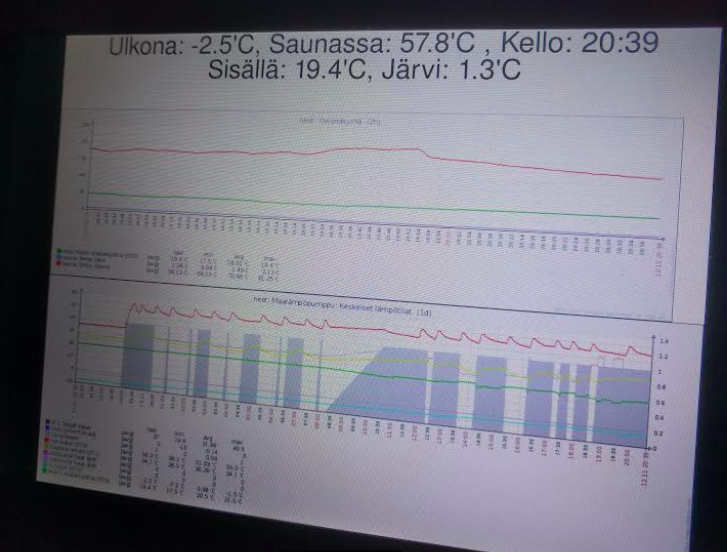

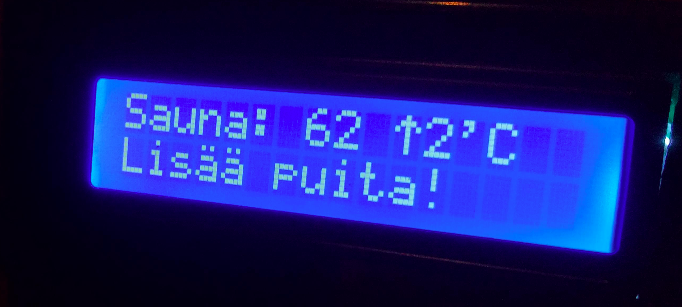

- InfluxDB to store measurements from my IoT devices.

- An MQTT broker.

- A bunch of small microservices I built myself for my IoT projects.