I recently built a storage server based on the Solaris-derived OpenIndiana, a 2U SuperMicro server and a 45-disk SuperMicro JBOD rack enclosure. The current configuration can host 84 TB of usable disk space, but we plan to extend this to at least 200TB in the coming months. This blog entry describes the configuration and the steps needed to build such a beast yourself.

Goals:

- Build a cheap storage system capable of hosting 200TB of disk space.

- The system will be used to archive data (around 25 GB per item) that is written once and then accessed infrequently (about once a month).

- The system must tolerate disk failures, so I chose raidz3, which can survive three simultaneous disk failures.

- The capacity can be extended incrementally by buying more disks and more enclosures.

- Each volume must be at least 20TB, but doesn't necessarily have to be bigger than that.

- Option to add a 10 Gigabit Ethernet card in the future.

- Broken disks must be easy to identify with a blinking LED.

I chose to deploy an OpenIndiana-based system that uses SuperMicro enclosures to host cheap 3TB disks. The total cost of the hardware with one enclosure was around 6600 EUR (December 2011 prices), without disks. Storing 85TB would cost an additional 14000 EUR at current disk prices, which are very high after the Thailand floods. Half a year ago the same disks would have cost about half of that. The disks are deployed in 11-disk raidz3 sets, one or two per zpool, which gives about 21.5TB per 11-disk set. New storage is added as a new zpool instead of being attached to an existing one.
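As a sanity check on the 21.5TB figure, here is the raw arithmetic for one 11-disk raidz3 set. This is a sketch under simplifying assumptions. Usable space is taken as (disks - parity) × disk size and converted from the decimal terabytes drive vendors sell to the binary TiB that ZFS reports. ZFS metadata, padding and reserved space are ignored, so a real pool shows somewhat less.

```python
# Back-of-the-envelope usable capacity of a raidz vdev.
# Assumption: usable = (disks - parity) * disk size; ZFS metadata, padding
# and reserved space are ignored, so real pools report somewhat less.

TB = 10**12   # drive vendors sell decimal terabytes
TIB = 2**40   # zfs list / df report binary units


def raidz_usable_tib(disks: int, parity: int, disk_tb: float) -> float:
    """Approximate usable space of one raidz vdev, in TiB."""
    if disks <= parity:
        raise ValueError("a raidz vdev needs more disks than parity devices")
    data_disks = disks - parity
    return data_disks * disk_tb * TB / TIB


per_set = raidz_usable_tib(disks=11, parity=3, disk_tb=3.0)
print(f"one 11-disk raidz3 set: {per_set:.1f} TiB")
print(f"four sets (44 disks):   {4 * per_set:.1f} TiB")
```

Eight data disks give roughly 21.8 TiB per set before overhead. Four sets come to about 87 TiB, which matches the roughly 85TB quoted for a full enclosure once overhead is taken out.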

Parts used:

- The host is based on a Supermicro X8DTH-6F server motherboard with two Intel Xeon E5620 4-core 2.4 GHz CPUs and 48 GB of memory. Our workload didn't need more memory, but it would be easy to add more.

- Currently one SC847E16-RJBOD1 enclosure. This densely packed 4U chassis can fit a whopping 45 disks.

- Each chassis is connected to an LSI Logic SAS 9205-8e HBA with two SAS cables. Each enclosure has two backplanes, and each backplane is connected to the HBA with its own cable.

- Two 40GB Intel 320-series SSDs for the operating system.

- Drives from two different vendors, so that we can run some benchmarks and tests before we commit to the 200TB upgrade:

- 3TB Seagate Barracuda XT 7200.12 ST33000651AS disks

- Western Digital Caviar Green 3TB disks

It's worth noting that this system is built to store large amounts of data that are not accessed frequently. If we needed better performance, for example for database use, we could easily add SSDs as L2ARC caches and even a separate ZIL device (for example the 8 GB STEC ZeusRAM DRAM device, which costs around 2200 EUR). Each zpool will use disks of a single type only. The WD Green drives, at least, need a firmware modification so that they don't park their heads all the time.

Installation:

Installing OpenIndiana is easy. Create a bootable CD or USB stick and boot the machine with only the root devices attached. The installation takes around 10 minutes; just choose to install the system on one of your SSDs with the standard disk layout. Once the installation is complete and the system has booted, follow these steps to make the second SSD bootable.

Next, set up some disk utilities under /usr/sbin. You will need them, for example, to identify the physical location of a broken disk in the enclosure (read more here).

Now it's time to connect your enclosure to the system with the SAS cables and boot it. OpenIndiana should recognize the new storage disks automatically. Use diskmap.py to get a list of the disk identifiers for the zpool create command:

garo@openindiana:/tank$ diskmap.py

Diskmap - openindiana> disks

0:02:00 c2t5000C5003EF23025d0 ST33000651AS 3.0T Ready (RDY)

0:02:01 c2t5000C5003EEE6655d0 ST33000651AS 3.0T Ready (RDY)

0:02:02 c2t5000C5003EE17259d0 ST33000651AS 3.0T Ready (RDY)

0:02:03 c2t5000C5003EE16F53d0 ST33000651AS 3.0T Ready (RDY)

0:02:04 c2t5000C5003EE5D5DCd0 ST33000651AS 3.0T Ready (RDY)

0:02:05 c2t5000C5003EE6F70Bd0 ST33000651AS 3.0T Ready (RDY)

0:02:06 c2t5000C5003EEF8E58d0 ST33000651AS 3.0T Ready (RDY)

0:02:07 c2t5000C5003EF0EBB8d0 ST33000651AS 3.0T Ready (RDY)

0:02:08 c2t5000C5003EF0F507d0 ST33000651AS 3.0T Ready (RDY)

0:02:09 c2t5000C5003EECE68Ad0 ST33000651AS 3.0T Ready (RDY)

0:02:11 c2t5000C5003EF2D1D0d0 ST33000651AS 3.0T Ready (RDY)

0:02:12 c2t5000C5003EEEBC8Cd0 ST33000651AS 3.0T Ready (RDY)

0:02:13 c2t5000C5003EE49672d0 ST33000651AS 3.0T Ready (RDY)

0:02:14 c2t5000C5003EEE7F2Ad0 ST33000651AS 3.0T Ready (RDY)

0:03:20 c2t5000C5003EED65BBd0 ST33000651AS 3.0T Ready (RDY)

1:01:00 c2t50015179596901EBd0 INTEL SSDSA2CT04 40.0G Ready (RDY) rpool: mirror-0

1:01:01 c2t50015179596A488Cd0 INTEL SSDSA2CT04 40.0G Ready (RDY) rpool: mirror-0

Drives : 17 Total Capacity : 45.1T

Here we have a total of 15 data disks, plus the two rpool SSDs. We'll use 11 of them to form a raidz3 vdev. Using the right number of drives in a raidz configuration matters for performance with 4K-sector disks. With 11 disks, raidz3 leaves 8 data disks, a power of two, so a 128K record splits evenly into 16K per data disk, which is a multiple of the 4K sector size. I selected 11 of the disks (c2t5000C5003EF23025d0, c2t5000C5003EEE6655d0, … , c2t5000C5003EEE7F2Ad0), created a new zpool with them and added three spares to it:

zpool create tank raidz3 c2t5000C5003EF23025d0 c2t5000C5003EEE6655d0 ... c2t5000C5003EEE7F2Ad0

zpool add tank spare c2t5000C5003EF2D1D0d0 c2t5000C5003EEEBC8Cd0 c2t5000C5003EE49672d0

This resulted in a nice big tank:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz3-0 ONLINE 0 0 0

c2t5000C5003EF23025d0 ONLINE 0 0 0

c2t5000C5003EEE6655d0 ONLINE 0 0 0

c2t5000C5003EE17259d0 ONLINE 0 0 0

c2t5000C5003EE16F53d0 ONLINE 0 0 0

c2t5000C5003EE5D5DCd0 ONLINE 0 0 0

c2t5000C5003EE6F70Bd0 ONLINE 0 0 0

c2t5000C5003EEF8E58d0 ONLINE 0 0 0

c2t5000C5003EF0EBB8d0 ONLINE 0 0 0

c2t5000C5003EF0F507d0 ONLINE 0 0 0

c2t5000C5003EECE68Ad0 ONLINE 0 0 0

c2t5000C5003EEE7F2Ad0 ONLINE 0 0 0

spares

c2t5000C5003EF2D1D0d0 AVAIL

c2t5000C5003EEEBC8Cd0 AVAIL

c2t5000C5003EE49672d0 AVAIL
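Picking the disks and assembling the two commands by hand is error-prone. zpool expects space-separated device names, and a disk must not appear both in the raidz3 vdev and in the spare list. The helper below is a hypothetical sketch that does this from saved diskmap.py output. It assumes the line format shown in the listing above and simply takes disks in listing order, so it would not pick exactly the same 11 disks as the pool shown here. It only returns the commands. Review them, or try them first with zpool create -n, which displays the resulting layout without creating the pool.

```python
# Hypothetical helper: build zpool commands from saved diskmap.py "disks" output.
# Assumed line format: "0:02:00 c2t5000C5003EF23025d0 ST33000651AS 3.0T Ready (RDY)";
# disks already in a pool carry a trailing note such as "rpool: mirror-0".
import re

DISK_LINE = re.compile(r"^\d+:\d+:\d+\s+(c\d+t[0-9A-F]+d\d+)\s+(.*)$")


def free_disks(diskmap_output: str, model: str) -> list[str]:
    """Return ids of disks of the given model that are not already in a pool."""
    ids = []
    for line in diskmap_output.splitlines():
        m = DISK_LINE.match(line.strip())
        if m and model in m.group(2) and ": " not in m.group(2):
            ids.append(m.group(1))
    return ids


def zpool_commands(pool: str, disks: list[str],
                   width: int = 11, spares: int = 3) -> list[str]:
    """One raidz3 vdev of `width` disks plus `spares` hot spares, no disk used twice."""
    if len(disks) < width + spares:
        raise ValueError(f"need {width + spares} disks, got {len(disks)}")
    vdev, spare = disks[:width], disks[width:width + spares]
    return [
        f"zpool create {pool} raidz3 " + " ".join(vdev),
        f"zpool add {pool} spare " + " ".join(spare),
    ]
```

Typical use is `for cmd in zpool_commands("tank", free_disks(text, "ST33000651AS")): print(cmd)`, where `text` holds the saved diskmap.py output. Filtering by model keeps each pool to a single drive type.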

Set up email alerts:

OpenIndiana ships with a default sendmail configuration that can deliver email to the internet by connecting directly to the destination mail server. Edit /etc/aliases to add a meaningful destination for the root account, and run newaliases when you are done. Then follow this guide to set up email alerts, so that you get notified when you lose a disk.
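If you also want a crude check that does not depend on the fault manager, a cron job can run zpool status -x and mail its output when something is wrong. When all is well that command prints “all pools are healthy”. This is a hypothetical supplement, not the method from the guide. It assumes the local sendmail accepts SMTP on localhost and that the aliases set up above deliver root@localhost to a real mailbox.

```python
# Hypothetical cron job: mail the output of `zpool status -x` when a pool is unhealthy.
# Assumptions: local sendmail listens on localhost:25 and /etc/aliases routes root.
import shutil
import smtplib
import socket
import subprocess
from email.message import EmailMessage
from typing import Optional

HEALTHY = "all pools are healthy"


def pool_problem(status_x_output: str) -> Optional[str]:
    """Return the report text if `zpool status -x` shows a problem, else None."""
    text = status_x_output.strip()
    return None if text == HEALTHY else text


def check_and_alert(rcpt: str = "root@localhost") -> None:
    if shutil.which("zpool") is None:
        return  # not a ZFS host, nothing to check
    out = subprocess.run(["zpool", "status", "-x"],
                         capture_output=True, text=True).stdout
    problem = pool_problem(out)
    if problem is None:
        return
    host = socket.gethostname()
    msg = EmailMessage()
    msg["Subject"] = f"zpool problem on {host}"
    msg["From"] = f"root@{host}"
    msg["To"] = rcpt
    msg.set_content(problem)
    with smtplib.SMTP("localhost") as smtp:
        smtp.send_message(msg)


if __name__ == "__main__":
    check_and_alert()
```

Run it from root's crontab every few minutes. It stays silent as long as all pools are healthy.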

Snapshot the current setup as a boot environment:

OpenIndiana boot environments allow you to snapshot your current system as a backup, so that you can always reboot into a known working state. This is really handy for system upgrades or when you experiment with something new. beadm list shows the default boot environment:

root@openindiana:/home/garo# beadm list

BE Active Mountpoint Space Policy Created

openindiana NR / 1.59G static 2012-01-02 11:57

There we can see our default openindiana boot environment, which is both active now (N) and will be active after the next reboot (R). The command beadm create -e openindiana openindiana-baseline snapshots the current environment into a new openindiana-baseline environment, which acts as a backup. This blog post at c0t0d0s0 has a lot of additional information on how to use the beadm tool.
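The Active column gets harder to read once there are several boot environments. Here is a small sketch that decodes it from beadm list output. It assumes the column layout shown above: N marks the environment you are running now, R marks the one that boots next, and "-" (assumed) marks an inactive one.

```python
# Decode the "Active" column of `beadm list` output (layout as shown above).
# Assumption: flags are N (active now), R (active on reboot), "-" (inactive).
FLAG_MEANING = {"N": "active now", "R": "active on reboot"}


def active_flags(beadm_output: str) -> dict[str, list[str]]:
    """Map each boot environment name to the meanings of its Active flags."""
    result = {}
    for line in beadm_output.splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[0] == "BE":
            continue  # skip blank lines and the header row
        result[fields[0]] = [FLAG_MEANING[c] for c in fields[1] if c in FLAG_MEANING]
    return result
```

Feeding it the listing above maps openindiana to both "active now" and "active on reboot". A fresh backup environment such as openindiana-baseline gets an empty list until you activate it.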

What to do when a disk fails?

The fault management system will email you when ZFS detects a problem with the system. Here's an example of the message we received when we removed a disk on the fly:

Subject: Fault Management Event: openindiana:ZFS-8000-D3

SUNW-MSG-ID: ZFS-8000-D3, TYPE: Fault, VER: 1, SEVERITY: Major

EVENT-TIME: Mon Jan 2 14:52:48 EET 2012

PLATFORM: X8DTH-i-6-iF-6F, CSN: 1234567890, HOSTNAME: openindiana

SOURCE: zfs-diagnosis, REV: 1.0

EVENT-ID: 475fe76a-9410-e3e5-8caa-dfdb3ec83b3b

DESC: A ZFS device failed. Refer to http://sun.com/msg/ZFS-8000-D3 for more information.

AUTO-RESPONSE: No automated response will occur.

IMPACT: Fault tolerance of the pool may be compromised.

REC-ACTION: Run ‘zpool status -x’ and replace the bad device.

Log into the machine and run zpool status to see exactly which disk has failed. You should also see that a spare disk has been activated. Look up the disk id (c2t5000C5003EEE7F2Ad0 in this case) in the output:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz3-0 ONLINE 0 0 0

c2t5000C5003EF23025d0 ONLINE 0 0 0

c2t5000C5003EEE6655d0 ONLINE 0 0 0

c2t5000C5003EE17259d0 ONLINE 0 0 0

c2t5000C5003EE16F53d0 ONLINE 0 0 0

c2t5000C5003EE5D5DCd0 ONLINE 0 0 0

c2t5000C5003EE6F70Bd0 ONLINE 0 0 0

c2t5000C5003EEF8E58d0 ONLINE 0 0 0

c2t5000C5003EF0EBB8d0 ONLINE 0 0 0

c2t5000C5003EF0F507d0 ONLINE 0 0 0

c2t5000C5003EECE68Ad0 ONLINE 0 0 0

spare-10

c2t5000C5003EEE7F2Ad0 UNAVAIL 0 0 0 cannot open

c2t5000C5003EF2D1D0d0 ONLINE 0 0 0 132GB resilvered

spares

c2t5000C5003EF2D1D0d0 INUSE currently in use

c2t5000C5003EEEBC8Cd0 AVAIL

c2t5000C5003EE49672d0 AVAIL
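Picking the failed disk out of a long zpool status listing can also be scripted. Here is a minimal sketch. It assumes device names of the cXtWWNdN form used on this system and treats the UNAVAIL, FAULTED, REMOVED and DEGRADED states as failures. That choice of states is an assumption, so adjust it to taste.

```python
# Pick failed disks out of `zpool status` output.
# Assumptions: disks are named like c2t5000C5003EEE7F2Ad0, and these leaf
# states count as failures; spare states such as INUSE or AVAIL are ignored.
import re

DISK_ID = re.compile(r"^c\d+t[0-9A-F]+d\d+$")
BAD_STATES = {"UNAVAIL", "FAULTED", "REMOVED", "DEGRADED"}


def failed_disks(status_output: str) -> list[str]:
    """Return disk ids whose state column shows a failure."""
    bad = []
    for line in status_output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and DISK_ID.match(fields[0]) and fields[1] in BAD_STATES:
            bad.append(fields[0])
    return bad
```

On the listing above this returns only c2t5000C5003EEE7F2Ad0. The activated spare shows up as ONLINE and INUSE, so it is not reported.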

Start diskmap.py and run the command “ledon c2t5000C5003EEE7F2Ad0”. You should now see a blinking red LED on the broken disk. You should also try to unconfigure the disk first with cfgadm. Run cfgadm -al to list your configurable devices, and look for your faulted disk on a line like this:

c8::w5000c5003eee7f2a,0 disk-path connected configured unknown

Notice that our disk id in zpool status was c2t5000C5003EEE7F2Ad0, so it shows up in the cfgadm output as “c8::w5000c5003eee7f2a,0”, with the WWN part of the id in lowercase. Now run cfgadm -c unconfigure c8::w5000c5003eee7f2a,0. I'm not really sure whether this step is needed, but our friends on the #openindiana IRC channel recommended it.
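The mapping between the two names is mechanical. The 16-hex-digit WWN in the zpool id reappears in lowercase after a "w" in the cfgadm attachment point, followed by ",0". The controller number differs (c2 versus c8 here), so the sketch below matches on the WWN part only. The helper names are hypothetical, and the ",0" LUN suffix is assumed from the example line above.

```python
# Hypothetical helpers: map a zpool disk id to its cfgadm attachment point.
import re
from typing import Optional

ZPOOL_ID = re.compile(r"^c\d+t([0-9A-Fa-f]{16})d\d+$")


def cfgadm_suffix(zpool_id: str) -> str:
    """c2t5000C5003EEE7F2Ad0 -> w5000c5003eee7f2a,0 (LUN 0 assumed)."""
    m = ZPOOL_ID.match(zpool_id)
    if m is None:
        raise ValueError(f"not a WWN-style disk id: {zpool_id}")
    return f"w{m.group(1).lower()},0"


def find_ap_id(cfgadm_output: str, zpool_id: str) -> Optional[str]:
    """Return the matching Ap_Id from `cfgadm -al` output, or None."""
    suffix = "::" + cfgadm_suffix(zpool_id)
    for line in cfgadm_output.splitlines():
        fields = line.split()
        if fields and fields[0].endswith(suffix):
            return fields[0]
    return None
```

The returned Ap_Id is what goes after cfgadm -c unconfigure.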

Now remove the physical disk with the blinking red LED and plug in a new drive. OpenIndiana should recognize the disk automatically. You can verify this by running dmesg:

genunix: [ID 936769 kern.info] sd17 is /scsi_vhci/disk@g5000c5003eed65bb

genunix: [ID 408114 kern.info] /scsi_vhci/disk@g5000c5003eed65bb (sd17) online
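The dmesg lines carry the same WWN in lowercase after "disk@g". The sketch below turns them into zpool-style ids. It assumes the c2 controller prefix and d0 suffix that the multipathed (/scsi_vhci) disks use on this particular system, and your controller number may differ.

```python
# Hypothetical helper: extract new disks from dmesg lines such as
#   "... /scsi_vhci/disk@g5000c5003eed65bb (sd17) online"
# Assumption: the controller prefix (c2 here) matches the other pool disks.
import re

VHCI_DISK = re.compile(r"/scsi_vhci/disk@g([0-9a-fA-F]{16})")


def disk_ids_from_dmesg(dmesg_text: str, controller: str = "c2") -> list[str]:
    """Return zpool-style disk ids, each once, in order of appearance."""
    ids = []
    for m in VHCI_DISK.finditer(dmesg_text):
        disk_id = f"{controller}t{m.group(1).upper()}d0"
        if disk_id not in ids:
            ids.append(disk_id)
    return ids
```

For the two lines above it yields c2t5000C5003EED65BBd0. That is the same id diskmap.py reports for the new disk.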

Now start diskmap.py, run discover and then disks, and you should see your new disk c2t5000C5003EED65BBd0. Next, replace the faulted device with the new one: zpool replace tank c2t5000C5003EEE7F2Ad0 c2t5000C5003EED65BBd0. The pool should now start resilvering the replacement disk. The spare disk stays attached and must be detached manually after the resilvering has completed: zpool detach tank c2t5000C5003EF2D1D0d0. There's more information and examples in the Oracle manuals, which you should read.

As you may have noticed, this involves a lot of manual operations. Some of them can be automated and the rest can be scripted. At the very least, read the zpool man page to learn more.
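As a starting point for such a script, the sketch below prints the manual steps from this section as a checklist, with the real device names filled in. It deliberately runs nothing, because the resilver has to finish before the spare can be detached. The function name and parameters are hypothetical.

```python
# Hypothetical checklist generator for the disk replacement steps above.
# Lines starting with "#" are manual actions; the rest are commands to run.
from typing import Optional


def replacement_plan(pool: str, failed: str, new: str, spare: str,
                     ap_id: Optional[str] = None) -> list[str]:
    """Return the replacement steps in the order they should be done."""
    steps = [f"# in diskmap.py: ledon {failed}"]
    if ap_id:
        steps.append(f"cfgadm -c unconfigure {ap_id}")
    steps += [
        "# swap the disk with the blinking LED, then check dmesg for the new one",
        f"zpool replace {pool} {failed} {new}",
        f"# wait until 'zpool status {pool}' shows the resilver has completed",
        f"zpool detach {pool} {spare}",
    ]
    return steps


for step in replacement_plan("tank", "c2t5000C5003EEE7F2Ad0", "c2t5000C5003EED65BBd0",
                             "c2t5000C5003EF2D1D0d0", ap_id="c8::w5000c5003eee7f2a,0"):
    print(step)
```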

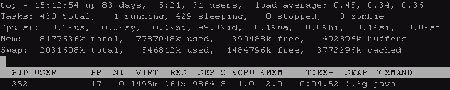

Benchmarks:

Simple sequential read and write benchmarks were run against a single 11-disk raidz3 vdev with dd (for example dd if=/dev/zero of=/tank/test bs=4k for writes), while monitoring the throughput with zpool iostat -v 5:

Read performance with bs=4k: 500MB/s

Write performance with bs=4k: 450MB/s

Read performance with bs=128k: 900MB/s

Write performance with bs=128k: 600MB/s

I have not done any IOPS benchmarks, but given how raidz3 works, the IOPS of a raidz3 vdev should be about the same as a single disk can do. The 3TB Seagate Barracuda XT 7200.12 ST33000651AS can do 50 to 100 IOPS, depending on the number of threads. CPU usage peaks at about 20% during the sequential operations.
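To put the sequential numbers in terms of the archive workload (about 25 GB per item), the arithmetic below converts each measured rate into seconds per item. It assumes decimal units (1 GB = 1000 MB) and ignores the network, which for remote clients may well be slower than the pool. Treat the results as pool-side figures, not end-to-end times.

```python
# Seconds to move one ~25 GB archive item at the measured sequential rates.
# Assumption: decimal units (1 GB = 1000 MB); network overhead is ignored.
ITEM_GB = 25

measured_mb_s = {
    "read, bs=4k": 500,
    "write, bs=4k": 450,
    "read, bs=128k": 900,
    "write, bs=128k": 600,
}


def seconds_per_item(rate_mb_s: float, item_gb: float = ITEM_GB) -> float:
    return item_gb * 1000 / rate_mb_s


for label, rate in measured_mb_s.items():
    print(f"{label:15} {seconds_per_item(rate):5.1f} s")
```

Even the slowest case measured (writes with bs=4k) stores an item in under a minute.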

Future:

We'll be running more tests and benchmarks and watching general stability in the coming months. We'll probably fill the first enclosure gradually over the next few months with a total of 44 disks, resulting in around 85TB of usable storage. Once this space runs out, we'll just buy another enclosure and another 9205-8e HBA and start filling that.
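The same arithmetic extends to the 200TB goal. Here is a rough planning sketch. It assumes about 21.5TB per 11-disk raidz3 set, the figure used earlier, and four sets per 45-bay enclosure, with the remaining bay and any hot spares left out of the count.

```python
# Rough capacity planning for the 200TB goal.
# Assumptions: ~21.5TB usable per 11-disk raidz3 set, four sets per
# 45-bay enclosure, spares not counted.
import math


def plan(target_tb: float, per_set_tb: float = 21.5,
         set_width: int = 11, sets_per_enclosure: int = 4) -> tuple[int, int, int]:
    """Return (raidz3 sets, disks in those sets, enclosures) needed for target_tb."""
    sets = math.ceil(target_tb / per_set_tb)
    enclosures = math.ceil(sets / sets_per_enclosure)
    return sets, sets * set_width, enclosures


for target in (85, 200):
    sets, disks, enclosures = plan(target)
    print(f"{target:>3} TB: {sets} sets, {disks} disks, {enclosures} enclosure(s)")
```

Under these assumptions, 200TB means ten sets and 110 disks spread over three enclosures, with the third only half full. Each added enclosure would come with its own 9205-8e HBA, as planned above.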

Update 2012-12-11:

It's been almost a year since I built this machine for one of my clients, and I have to say that I'm quite pleased with it. The system now has three storage pools, each a raidz3 spanning 11 disks. Nearly every disk has worked just fine so far; I think we've had just one disk failure during the year. The disk types reported by diskmap.py are “ST33000651AS” and “WDC WD30EZRX-00M”, all 3TB disks. The Linux clients have had a few kernel panics, but I have no idea whether those are related to the NFS stack.

One of my readers also posted a nice article at http://www.networkmonkey.de/emulex-fibrechannel-target-unter-solaris-11/, so be sure to check that out too.